What if your infrastructure could think, analyze, and heal itself before you even wake up? AI-powered self-healing systems don't just tell you what happened: they analyze why it happened and fix it automatically when it is safe to do so.

This is Part 1 of our DevOps automation series, focusing on AI-driven decision making for proactive monitoring and automated remediation. Part 2 will cover deterministic alternatives and hybrid approaches for teams who prefer rule-based automation in production environments.

The Evolution from Reactive to Proactive Monitoring

Traditional uptime monitoring tells you what happened after downtime occurs. AI-powered intelligent infrastructure with proactive monitoring tells you what happened and why it happened, then fixes it automatically to maintain your uptime guarantee.

Our previous incident response workflow handled routing and notifications. This new DevOps automation approach takes it further by adding intelligent decision-making and automated remediation capabilities specifically designed for uptime protection in modern application stacks.

How AI-Driven DevOps Automation Guarantees Uptime

The AI-Agent Decision Engine operates on a simple but powerful principle for intelligent infrastructure: Uptime First, Human Intervention When Necessary.

Here's how proactive monitoring with AI-driven decisions protects your uptime:

EMERGENCY_HEALING scenarios:

- Disk usage > 65% (service failure imminent, downtime risk)

- Memory usage > 65% (OOM kill risk, application crashes)

- Single process consuming > 30% CPU for > 3 minutes (runaway process affecting performance)

- Critical services down (nginx, database, PM2 apps causing outages)

NOTIFY_ONLY scenarios:

- Performance degraded but services functional and uptime maintained

- Resource usage elevated but not threatening availability

- Temporary spikes that may self-resolve without downtime impact

- Issues during business hours unless critical to uptime
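Read as code, the criteria above amount to a handful of threshold checks. The sketch below is illustrative only: in this workflow the AI agent makes the call, and the `snapshot` fields (`diskPct`, `memPct`, `topProcessCpuPct`, `topProcessMinutes`, `loadAvg`, `criticalServicesDown`) are hypothetical names. The thresholds follow the triage prompt in Step 2, which also includes a load-average check.

```javascript
// Thresholds taken from the Step 2 triage prompt.
const THRESHOLDS = {
  diskPct: 65,        // disk usage: service failure imminent
  memPct: 65,         // memory usage: OOM kill risk
  processCpuPct: 30,  // a single process above this CPU share...
  processMinutes: 3,  // ...for longer than this is a runaway process
  loadAvg: 20,        // load average: system unresponsive
};

// snapshot is a hypothetical summary of the host's current state.
function classify(snapshot) {
  const reasons = [];
  if (snapshot.diskPct > THRESHOLDS.diskPct) reasons.push(`disk ${snapshot.diskPct}%`);
  if (snapshot.memPct > THRESHOLDS.memPct) reasons.push(`memory ${snapshot.memPct}%`);
  if (snapshot.topProcessCpuPct > THRESHOLDS.processCpuPct &&
      snapshot.topProcessMinutes > THRESHOLDS.processMinutes) {
    reasons.push(`runaway process at ${snapshot.topProcessCpuPct}% CPU`);
  }
  if (snapshot.loadAvg > THRESHOLDS.loadAvg) reasons.push(`load average ${snapshot.loadAvg}`);
  const down = snapshot.criticalServicesDown || [];
  if (down.length > 0) reasons.push(`services down: ${down.join(', ')}`);

  // Any survival threat means healing; everything else is a notification.
  return { decision: reasons.length > 0 ? 'EMERGENCY_HEALING' : 'NOTIFY_ONLY', reasons };
}

console.log(classify({
  diskPct: 40, memPct: 72, topProcessCpuPct: 12, topProcessMinutes: 1,
  loadAvg: 1.5, criticalServicesDown: [],
}));
// { decision: 'EMERGENCY_HEALING', reasons: [ 'memory 72%' ] }
```

Business hours never appear in the function because, per the prompt, they only matter for non-critical issues, and those end up as notifications anyway.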

The intelligent infrastructure doesn't just react to uptime alerts: it compares the current system state with the original alert and makes proactive decisions that prevent downtime.

Building Your Intelligent Infrastructure for Uptime Protection

Let's build this DevOps automation step by step using n8n, creating an intelligent infrastructure that can handle PM2 applications, Node.js services, and traditional server infrastructure while maintaining uptime guarantees.

Prerequisites: Setting Up Your Proactive Monitoring Foundation

Before building the intelligent infrastructure workflow, ensure you have:

- Prometheus + AlertManager configured with proper uptime monitoring alert rules

- System monitoring scripts that can analyze current server state for proactive issue detection. I've shared a working script for analyzing system health here: https://github.com/Bubobot-Team/sysadmin-toolkit/blob/staging/scripts/system-health/system-doctor.sh

- SSH access to your servers for automated remediation to maintain uptime

- n8n instance with AI agent capabilities for DevOps automation

- OpenAI API key for intelligent decision making in your monitoring workflow

Here are sample alert rules for proactive monitoring that trigger our uptime protection workflow:

```yaml
groups:
  - name: self-healing
    rules:
      - alert: HighCPU
        expr: 100 - (avg by (instance) (irate(node_cpu_seconds_total{mode="idle",job="exporters"}[5m])) * 100) > 80
        for: 2m
        labels:
          severity: critical
          service: node
        annotations:
          description: "CPU usage on {{ $labels.instance }} is {{ $value }}%"

      - alert: PM2AppDown
        expr: pm2_up == 0
        for: 1m
        labels:
          severity: critical
          service: pm2
        annotations:
          description: "PM2 application {{ $labels.app }} is down on {{ $labels.instance }}"
```
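The `HighCPU` expression reads inside out: `irate` turns each core's cumulative idle-seconds counter into a per-second idle fraction using the last two samples, `avg by (instance)` averages that fraction across cores, and subtracting it (as a percentage) from 100 gives the busy percentage. A small sketch of the same arithmetic, assuming you already hold the last two samples of the idle counter for each core (the `{ t, idle }` sample layout is hypothetical):

```javascript
// Mirrors: 100 - (avg by (instance) (irate(node_cpu_seconds_total{mode="idle"}[5m])) * 100)
// Each sample is { t: unixSeconds, idle: cumulativeIdleSeconds } for one CPU core.
function cpuBusyPercent(prevByCore, currByCore) {
  const idleRates = currByCore.map((curr, i) => {
    const prev = prevByCore[i];
    // irate: per-second rate computed from the last two samples only
    return (curr.idle - prev.idle) / (curr.t - prev.t);
  });
  const avgIdle = idleRates.reduce((sum, r) => sum + r, 0) / idleRates.length;
  return 100 - avgIdle * 100;
}

// Two cores sampled 15 s apart, each idle for 1.5 of those 15 s (10% idle).
const prev = [{ t: 0, idle: 1000 }, { t: 0, idle: 2000 }];
const curr = [{ t: 15, idle: 1001.5 }, { t: 15, idle: 2001.5 }];
console.log(cpuBusyPercent(prev, curr).toFixed(1)); // 90.0
```

A value of 90 is above the rule's `> 80` threshold, so the alert fires once the condition has held for the `for: 2m` window.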

Step 1: Alert Reception and Initial Analysis

The workflow starts with a Webhook node that receives alerts from AlertManager, followed by a Code node that enriches the alert data:

```javascript
// n8n Code node: flatten the AlertManager webhook payload into one item per alert
const alerts = items[0].json.body.alerts || [];
const now = new Date();

return alerts.map(alert => {
  const startsAt = new Date(alert.startsAt);
  // AlertManager sends endsAt = "0001-01-01T00:00:00Z" while an alert is still firing,
  // so only trust endsAt for resolved alerts; otherwise measure up to now.
  const endsAt = alert.status === 'resolved' ? new Date(alert.endsAt) : now;
  const hour = now.getUTCHours();
  const isBusinessHours = hour >= 9 && hour < 17; // 9 AM–5 PM UTC, evaluated when the workflow runs
  const durationMinutes = (endsAt - startsAt) / 1000 / 60; // Duration in minutes

  return {
    json: {
      status: alert.status,                        // firing or resolved
      alertname: alert.labels.alertname,           // e.g., HighCPU
      severity: alert.labels.severity,             // e.g., critical
      instance: alert.labels.instance,             // e.g., 47.129.163.27:9100
      service: alert.labels.service,               // e.g., node
      description: alert.annotations.description,  // e.g., CPU usage description
      startsAt: alert.startsAt,                    // e.g., 2025-05-25T06:40:29.682Z
      endsAt: alert.endsAt,                        // e.g., 2025-05-25T06:42:59.682Z (meaningful once resolved)
      fingerprint: alert.fingerprint,              // e.g., 80e7d055dbb50b48
      isBusinessHours: isBusinessHours,            // true if within 9 AM–5 PM UTC
      durationMinutes: durationMinutes             // Duration in minutes
    }
  };
});
```
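To exercise the enrichment logic outside n8n, it helps to pull it into a plain function and feed it a hand-written payload. This sketch assumes the standard AlertManager webhook body (an `alerts` array whose entries carry `status`, `labels`, `annotations`, `startsAt`, `endsAt`, and `fingerprint`). AlertManager reports `endsAt` as `0001-01-01T00:00:00Z` while an alert is still firing, so the sketch measures duration against the current time in that case. The `enrichAlert` name and the injected `now` parameter are illustrative, and the payload values are made up.

```javascript
// Pure version of the Step 1 enrichment, with "now" injected so it can be tested.
function enrichAlert(alert, now = new Date()) {
  const startsAt = new Date(alert.startsAt);
  // Firing alerts carry a zero-value endsAt; use "now" instead.
  const endsAt = alert.status === 'resolved' ? new Date(alert.endsAt) : now;
  const hour = now.getUTCHours();
  return {
    alertname: alert.labels.alertname,
    instance: alert.labels.instance,
    status: alert.status,
    isBusinessHours: hour >= 9 && hour < 17, // 9 AM–5 PM UTC
    durationMinutes: (endsAt - startsAt) / 1000 / 60,
  };
}

// Hand-written payload in AlertManager's webhook format (values are made up).
const body = {
  alerts: [{
    status: 'firing',
    labels: { alertname: 'HighCPU', instance: '10.0.0.5:9100', severity: 'critical', service: 'node' },
    annotations: { description: 'CPU usage on 10.0.0.5:9100 is 92%' },
    startsAt: '2025-05-25T06:40:00Z',
    endsAt: '0001-01-01T00:00:00Z',
    fingerprint: 'abc123',
  }],
};

const enriched = body.alerts.map(a => enrichAlert(a, new Date('2025-05-25T06:45:00Z')));
console.log(enriched[0].durationMinutes, enriched[0].isBusinessHours); // 5 false
```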

Step 2: AI-Powered Uptime Triage Decision

The first AI Agent acts as an intelligent infrastructure triage system, analyzing whether this is a true uptime threat requiring immediate DevOps automation or just a performance issue requiring notification:

```text
You are an expert SysAdmin AI responsible for making critical decisions about system survival. Analyze the following Prometheus alert and decide whether this is an emergency requiring immediate self-healing action or just a performance issue requiring notification.

Alert Details:
- Alert Name: {{ $node["Code"].json["alertname"] }}
- Severity: {{ $node["Code"].json["severity"] }}
- Instance: {{ $node["Code"].json["instance"] }}
- Service: {{ $node["Code"].json["service"] }}
- Status: {{ $node["Code"].json["status"] }}
- Description: {{ $node["Code"].json["description"] }}
- Duration: {{ $node["Code"].json.durationMinutes }} minutes
- Business Hours: {{ $node["Code"].json["isBusinessHours"] }}

Decision Criteria:

EMERGENCY HEALING (take immediate action):
- Disk usage > 65% (service failure imminent)
- Memory usage > 65% (OOM kill risk)
- Single process consuming > 30% CPU for > 3 minutes (runaway process)
- Load average > 20 (system unresponsive)
- Critical services down (nginx, database, etc.)
- Any condition that threatens service availability within next 30 minutes

NOTIFY ONLY (inform team but don't act):
- Performance degradation but services still functional
- Resource usage elevated but not critical
- Temporary spikes that may self-resolve
- Issues during business hours unless critical

Your Decision Rules:
- If system survival is at risk → EMERGENCY_HEALING
- If performance is degraded but stable → NOTIFY_ONLY
- When in doubt about service survival → EMERGENCY_HEALING
- Consider business hours only for non-critical issues

Respond with this exact JSON format:
{
  "decision": "EMERGENCY_HEALING|NOTIFY_ONLY",
  "threat_level": "CRITICAL|HIGH|MEDIUM|LOW",
  "instance": "The instance (IP address)",
  "analysis": "Brief analysis of what's happening and why it's critical/non-critical",
  "immediate_actions": [
    {
      "command": "exact command to execute immediately",
      "purpose": "what this command does to ensure survival",
      "safety_level": "SAFE|CAUTION|RISKY"
    }
  ],
  "survival_timeline": "How long until potential service failure (e.g., '30 minutes', 'immediate', 'stable')",
  "notification_message": "Message to send to Discord explaining the situation and actions taken/recommended",
  "reasoning": "Why this decision ensures system survival or why notification is sufficient"
}
```

Example AI responses:

Notification only:

```json
{
  "decision": "NOTIFY_ONLY",
  "threat_level": "LOW",
  "analysis": "CPU usage is 10.9%, which is well below critical thresholds for service failure. The alert has already been resolved, indicating that the condition was temporary.",
  "immediate_actions": [],
  "survival_timeline": "stable",
  "notification_message": "Alert HighCPU was triggered for instance 47.129.163.27:9100 indicating a CPU usage of 10.9%. The alert has since been resolved, and there is no immediate need for action.",
  "reasoning": "The CPU usage is not at a level that threatens service availability, and the alert is already resolved. Notifying the team will ensure awareness but immediate action is not necessary."
}
```

Emergency case:

```json
{
  "decision": "EMERGENCY_HEALING",
  "threat_level": "CRITICAL",
  "analysis": "The CPU usage on 47.129.163.27:9100 is at 100%, indicating that the system is completely saturated and could lead to unresponsiveness or service failure.",
  "immediate_actions": [
    {
      "command": "kill -9 $(pgrep -f stress-ng)",
      "purpose": "Kill runaway process consuming excessive CPU resources",
      "safety_level": "RISKY"
    }
  ],
  "survival_timeline": "immediate",
  "notification_message": "Critical alert: CPU usage on 47.129.163.27 is at 100%. Immediate action is being taken to kill the runaway process and restore system performance.",
  "reasoning": "The critical nature of the CPU usage at 100% poses an immediate risk to system availability."
}
```
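Because the agent replies with free text, a defensive parsing step between the AI Agent and the If node is worth considering. A minimal sketch, assuming the reply arrives as a string that may be wrapped in a Markdown code fence; the `parseTriage` and `needsDeepAnalysis` helpers and the fallback to `NOTIFY_ONLY` on malformed output are illustrative choices, not part of the published workflow. The last helper mirrors the routing in Step 3: only `CRITICAL` threat levels go on to deep analysis.

```javascript
const DECISIONS = ['EMERGENCY_HEALING', 'NOTIFY_ONLY'];
const THREAT_LEVELS = ['CRITICAL', 'HIGH', 'MEDIUM', 'LOW'];

// Fallback used whenever the reply cannot be trusted: never act, always tell a human.
function fallback(message) {
  return { decision: 'NOTIFY_ONLY', threat_level: 'HIGH', immediate_actions: [], notification_message: message };
}

function parseTriage(raw) {
  // Strip an optional ```json ... ``` wrapper that chat models often add.
  const text = raw.trim().replace(/^```(?:json)?\s*/i, '').replace(/\s*```$/, '');
  let reply;
  try {
    reply = JSON.parse(text);
  } catch (err) {
    return fallback(`Unparseable AI triage output: ${raw.slice(0, 200)}`);
  }
  if (!reply || !DECISIONS.includes(reply.decision) || !THREAT_LEVELS.includes(reply.threat_level)) {
    return fallback('AI triage returned an unexpected decision or threat level');
  }
  const actions = Array.isArray(reply.immediate_actions) ? reply.immediate_actions : [];
  return { ...reply, immediate_actions: actions };
}

// The If node's condition: only CRITICAL threats trigger the SSH deep analysis.
const needsDeepAnalysis = triage => triage.threat_level === 'CRITICAL';

const triage = parseTriage('```json\n{"decision":"NOTIFY_ONLY","threat_level":"LOW","immediate_actions":[]}\n```');
console.log(triage.decision, needsDeepAnalysis(triage)); // NOTIFY_ONLY false
```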

Step 3: Smart Routing and Deep Analysis

An If node routes based on threat level. For CRITICAL alerts, the workflow triggers deep system analysis using SSH to run diagnostic scripts:

```bash
bash /opt/sysadmin-toolkit/scripts/system-health/system-doctor.sh --report-json --check-only
```

This script analyzes:

- Current CPU, memory, disk usage

- Running processes and their resource consumption

- PM2 application status and health

- System load and performance metrics

- Log file sizes and disk space issues
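The SSH node exposes the script's exit code, `stdout`, and `stderr`, and the Step 4 prompt interpolates them directly. A long report can crowd a model's context window, so a small Code node in between can compact the `--report-json` output when it is valid JSON and cap its size either way. A sketch under assumptions: the character limits and the `summarizeDoctorOutput` name are arbitrary, and nothing here depends on the script's actual JSON schema.

```javascript
const MAX_CHARS = 8000; // arbitrary prompt budget; tune for your model

function truncate(text, max = MAX_CHARS) {
  return text.length > max ? `${text.slice(0, max)}\n...[truncated ${text.length - max} chars]` : text;
}

// sshResult mirrors the fields the Step 4 prompt reads: { code, stdout, stderr }
function summarizeDoctorOutput(sshResult) {
  let report;
  try {
    // Re-serializing drops pretty-print whitespace before truncating.
    report = JSON.stringify(JSON.parse(sshResult.stdout));
  } catch {
    // Not JSON (or empty): pass the raw text through.
    report = sshResult.stdout || '';
  }
  return {
    exitCode: sshResult.code,
    report: truncate(report),
    errors: truncate(sshResult.stderr || '', 2000),
  };
}

const out = summarizeDoctorOutput({ code: 1, stdout: '{\n  "cpu": 85,\n  "load": 3.2\n}', stderr: '' });
console.log(out.report); // {"cpu":85,"load":3.2}
```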

Step 4: Intelligent Remediation Planning

The second AI Agent compares the original alert with current system state and creates targeted remediation plans:

```text
You are an expert DevOps AI responsible for intelligent incident analysis and targeted healing decisions. Compare the original alert with current system state, then provide precise, targeted actions based on actual conditions.

ORIGINAL ALERT CONTEXT:
- Alert: {{ $node["Code"].json.alertname }}
- Instance: {{ $node["Code"].json.instance }}
- Severity: {{ $node["Code"].json.severity }}
- Description: {{ $node["Code"].json.description }}
- Duration: {{ $node["Code"].json.durationMinutes }} minutes
- Status: {{ $node["Code"].json.status }}

CURRENT SYSTEM ANALYSIS:
Exit Code: {{ $node["SSH - Analyze system"].json.code }}
System Doctor Output: {{ $node["SSH - Analyze system"].json.stdout }}
System Errors: {{ $node["SSH - Analyze system"].json.stderr }}

PRODUCTION SERVICES CONTEXT:
{
  "critical_services": ["nginx", "mysql", "redis", "pm2", "nodejs-app"],
  "pm2_applications": {
    "api-server": {"instances": 2, "max_memory": "512M", "restart_limit": 3},
    "worker-queue": {"instances": 1, "max_memory": "256M", "restart_limit": 2},
    "web-frontend": {"instances": 2, "max_memory": "1G", "restart_limit": 3}
  },
  "restart_policies": {
    "nginx": {"method": "reload", "safe_restart": true},
    "mysql": {"method": "restart", "requires_backup_check": true},
    "pm2-apps": {"method": "restart", "health_check_required": true, "graceful_timeout": 10},
    "stress-ng": {"method": "kill", "safe_to_terminate": true}
  },
  "cleanup_policies": {
    "temp_file_threshold_mb": 500,
    "log_rotation_threshold_mb": 1000,
    "pm2_log_cleanup": true,
    "node_modules_cache": "/tmp/.npm",
    "safe_temp_patterns": ["/tmp/*.tmp", "/tmp/*.log", "/tmp/*.bin"],
    "protected_files": ["/tmp/mysql.sock", "/tmp/.X11-unix"]
  }
}

DECISION LOGIC FOR PM2 APPS:
- If CPU > 90% AND PM2 process consuming high CPU → MODERATE: Restart specific PM2 app
- If Memory > 85% AND PM2 process memory leak → MODERATE: Restart PM2 app with memory limit
- If PM2 app status "errored" or "stopped" → SAFE: pm2 restart <app-name>
- If PM2 logs showing errors → SAFE: pm2 flush (clear logs)
- If Node.js process unresponsive → MODERATE: pm2 reload <app-name> (zero-downtime)
- If multiple PM2 restarts detected → RISKY: pm2 stop all && pm2 start all

Respond with this exact JSON format:
{
  "situation_assessment": {
    "alert_vs_reality": "Current system state compared to original alert",
    "issue_status": "RESOLVED|ONGOING|ESCALATED|CRITICAL",
    "action_required": "NONE|PREVENTIVE|CORRECTIVE|EMERGENCY",
    "confidence_level": "HIGH|MEDIUM|LOW",
    "system_exit_code": "exit code from system analysis",
    "key_findings": ["list", "of", "critical", "findings", "including", "pm2", "app", "status"],
    "pm2_assessment": "specific analysis of PM2 applications health"
  },
  "targeted_actions": [
    {
      "action": "specific action based on system doctor findings",
      "command": "exact command to execute (PM2 commands preferred for Node.js issues)",
      "target": "specific PM2 app/service/process identified in system doctor output",
      "justification": "why this specific action is needed based on system doctor data",
      "risk_level": "SAFE|MODERATE|RISKY",
      "expected_outcome": "specific result expected",
      "execution_order": 1
    }
  ],
  "service_actions": [
    {
      "service": "pm2_app_name or service_name if any issues detected",
      "action": "restart|reload|stop|start|flush",
      "reason": "based on system doctor findings and PM2 health assessment"
    }
  ],
  "discord_report": {
    "status_summary": "Brief status: alert status + PM2 findings + actions taken",
    "technical_details": "What system doctor found about PM2 apps and what actions were performed",
    "team_actions": ["PM2-specific manual steps needed"],
    "severity_level": "LOW|MEDIUM|HIGH|CRITICAL based on PM2 app health and system doctor findings"
  }
}
```
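The PM2 rules in this prompt are effectively a lookup table from an observed condition to a command and a risk level. Writing the table out as data makes it easy to compare what the agent proposed with what the rules would have suggested. This is a sketch: the `facts` fields (`cpuPct`, `appHighCpu`, `memPct`, `appMemoryLeak`, `status`, `logErrors`, `unresponsive`, `restartStorm`) and the `suggestPm2Actions` name are hypothetical.

```javascript
// Each rule: a predicate over hypothetical facts about one PM2 app, plus the command it implies.
const PM2_RULES = [
  { when: f => f.cpuPct > 90 && f.appHighCpu, risk: 'MODERATE', cmd: app => `pm2 restart ${app}` },
  // The prompt also asks for a memory limit here; how you apply it is left out of this sketch.
  { when: f => f.memPct > 85 && f.appMemoryLeak, risk: 'MODERATE', cmd: app => `pm2 restart ${app}` },
  { when: f => f.status === 'errored' || f.status === 'stopped', risk: 'SAFE', cmd: app => `pm2 restart ${app}` },
  { when: f => f.logErrors, risk: 'SAFE', cmd: () => 'pm2 flush' },
  { when: f => f.unresponsive, risk: 'MODERATE', cmd: app => `pm2 reload ${app}` },
  { when: f => f.restartStorm, risk: 'RISKY', cmd: () => 'pm2 stop all && pm2 start all' },
];

function suggestPm2Actions(app, facts) {
  return PM2_RULES
    .filter(rule => rule.when(facts))
    .map(rule => ({ command: rule.cmd(app), risk_level: rule.risk }));
}

console.log(suggestPm2Actions('api-server', { status: 'errored', logErrors: true }).map(a => a.command));
// [ 'pm2 restart api-server', 'pm2 flush' ]
```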

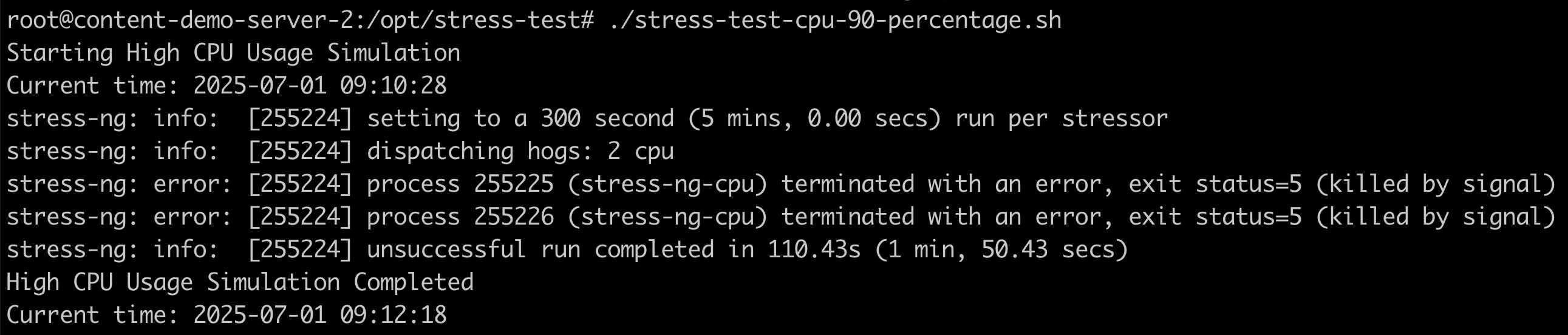

Example AI analysis during stress test:

```json
{
  "situation_assessment": {
    "alert_vs_reality": "Current CPU usage is critically high at 85%, slightly lower than the original alert but still significant.",
    "issue_status": "ONGOING",
    "action_required": "CORRECTIVE",
    "confidence_level": "MEDIUM",
    "system_exit_code": "1",
    "key_findings": [
      "High CPU usage: 85%",
      "Top CPU processes stress-ng-cpu",
      "Large log and temporary files found: /tmp/auth.log (865MB), /tmp/auth.bin (865MB)"
    ],
    "pm2_assessment": "No specific PM2 processes listed in the high CPU consuming processes, however, PM2 applications may still be indirectly affected due to overall CPU stress."
  },
  "targeted_actions": [
    {
      "action": "Terminate stress-ng processes to reduce CPU usage",
      "command": "kill -9 245136 245137",
      "target": "stress-ng-cpu processes",
      "justification": "These processes are consuming excessive CPU resources (82.3%) which is contributing to the overall high load.",
      "risk_level": "SAFE",
      "expected_outcome": "For CPU usage to drop below critical levels, improving system responsiveness.",
      "execution_order": 1
    },
    {
      "action": "Clean up large temporary files",
      "command": "find /tmp -name '*.log' -size +500M -delete",
      "target": "/tmp/auth.log, /tmp/auth.bin",
      "justification": "Large temporary files may be consuming disk space and can impact system performance.",
      "risk_level": "SAFE",
      "expected_outcome": "Free up resources and improve system performance.",
      "execution_order": 2
    }
  ]
}
```

Step 5: Safe Command Execution

The Dynamic SSH Command Executor validates each AI recommendation against safety rules:

```javascript
// Enhanced safety validation
function validateCommand(command, riskLevel) {
  const dangerousPatterns = [
    'rm -rf /', 'dd if=', 'mkfs', 'fdisk', 'shutdown', 'reboot', 'halt'
  ];
  const isDangerous = dangerousPatterns.some(pattern =>
    command.toLowerCase().includes(pattern.toLowerCase())
  );

  if (isDangerous || riskLevel === 'RISKY') {
    return { safe: false, reason: `Blocked: ${command}` };
  }
  return { safe: true };
}

// Process and execute safe actions only
// (aiResponse is the parsed JSON reply from the second AI Agent)
const actionsToExecute = (aiResponse.targeted_actions || []).filter(action =>
  validateCommand(action.command, action.risk_level).safe
);
```

Only SAFE and MODERATE risk commands execute automatically. RISKY commands require manual approval.
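A substring denylist is easy to slip past: `rm -fr /` and `rm  -rf /` (two spaces) match none of the blocked patterns, and a hallucinated command can take any shape. A stricter variant, sketched here under the assumption that you can enumerate the commands you are willing to automate, accepts only commands that match an explicit allowlist, sends everything else (and anything marked `RISKY`) to manual approval, and orders approved actions by `execution_order`. The regular expressions and the `planExecution` name are examples, not a vetted policy.

```javascript
// Example allowlist: anchored patterns for the few commands allowed to run unattended.
const ALLOWED = [
  /^pm2 (restart|reload) [A-Za-z0-9._-]+$/,
  /^pm2 flush$/,
  /^kill -9 \d+( \d+)*$/,   // explicit PIDs only, no shell substitution
  /^systemctl reload nginx$/,
];

function planExecution(actions) {
  const auto = [];
  const needsApproval = [];
  for (const action of actions) {
    const cmd = (action.command || '').trim();
    const allowed = ALLOWED.some(re => re.test(cmd));
    if (allowed && action.risk_level !== 'RISKY') auto.push(action);
    else needsApproval.push(action);
  }
  // Run in the order the agent requested; actions without execution_order go last.
  auto.sort((a, b) => (a.execution_order ?? Infinity) - (b.execution_order ?? Infinity));
  return { auto, needsApproval };
}

const plan = planExecution([
  { command: "find /tmp -name '*.log' -size +500M -delete", risk_level: 'SAFE', execution_order: 2 },
  { command: 'kill -9 245136 245137', risk_level: 'SAFE', execution_order: 1 },
  { command: 'rm -fr /', risk_level: 'SAFE', execution_order: 3 },
]);
console.log(plan.auto.map(a => a.command), plan.needsApproval.length); // [ 'kill -9 245136 245137' ] 2
```

With this policy, the `find ... -delete` cleanup from the stress-test example would wait for a human rather than run automatically; whether that trade-off is right depends on how much you trust the agent's target selection.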

Step 6: Execution and Reporting

The final SSH node executes approved commands while Discord nodes report results:

For automatic fixes:

```text
🔥 HighCPU Alert
🖥️ Instance: 47.129.163.27:9100
🔴 Severity: CRITICAL
🚨 Active
📝 Details: High CPU usage detected at 92.37%. Immediate action taken to terminate high CPU consuming process.
🔧 Action: Executed targeted remediation actions
```

For notifications only:

```text
🔥 HighCPU Alert
🖥️ Instance: 47.129.163.27:9100
🔴 Severity: LOW
🚨 Active
📝 Details: CPU usage was briefly high at 10.67%. Issue has resolved itself and is currently stable.
🔧 Action: Monitoring only - no intervention needed
```
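The Discord messages above are plain strings assembled from the enriched alert and the agent's report. A sketch of that formatting step, assuming a Discord webhook, whose JSON body carries the message text in its `content` field (limited to 2,000 characters). The `formatDiscordMessage` name, the `report` fields, and the resolved-state line are illustrative.

```javascript
// Build the Discord text from the enriched alert plus the AI's summary and the action taken.
function formatDiscordMessage(alert, report) {
  const state = alert.status === 'firing' ? '🚨 Active' : '✅ Resolved';
  return [
    `🔥 ${alert.alertname} Alert`,
    `🖥️ Instance: ${alert.instance}`,
    `🔴 Severity: ${report.severity}`,
    state,
    `📝 Details: ${report.details}`,
    `🔧 Action: ${report.action}`,
  ].join('\n');
}

// Discord webhooks take a JSON body; the text goes in "content" (max 2000 characters).
const payload = {
  content: formatDiscordMessage(
    { alertname: 'HighCPU', instance: '10.0.0.5:9100', status: 'firing' },
    { severity: 'CRITICAL', details: 'High CPU usage detected at 92.37%.', action: 'Executed targeted remediation actions' },
  ).slice(0, 2000),
};
console.log(payload.content.split('\n')[0]); // 🔥 HighCPU Alert
```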

Safety Mechanisms for Reliable DevOps Automation

The intelligent infrastructure implements comprehensive safety layers for reliable uptime protection:

- Command Pattern Blocking: Prevents destructive operations that could cause downtime

- Risk Level Assessment: SAFE/MODERATE/RISKY classification for DevOps automation

- Business Hours Consideration: Reduced automation during work hours unless uptime is threatened

- Credential Mapping: Secure SSH access per server for reliable monitoring

- Execution Ordering: Prioritized command sequences for optimal uptime protection

Implementation Resources

Ready to implement this for your infrastructure?

Complete Workflow and Scripts

- n8n Workflow (JSON): https://github.com/Bubobot-Team/automation-workflow-monitoring/blob/main/n8n/n8n_AI_Agent_Decision_Engine_for_Self_Healing_Server_VPS.json

- System Health Scripts: https://github.com/Bubobot-Team/sysadmin-toolkit/blob/staging/scripts/system-health/system-doctor.sh

- Visual Workflow Diagram: https://github.com/Bubobot-Team/automation-workflow-monitoring/tree/main#ai-agent-decision-engine-for-self-healing-server-vps

Quick Setup Guide

- Deploy Prometheus + AlertManager stack

- Configure webhook routing to n8n

- Import workflow JSON into n8n

- Configure OpenAI API key and SSH credentials

- Install system-doctor.sh on each monitored server (Step 3 runs it from /opt/sysadmin-toolkit/scripts/system-health/)

- Test with non-critical alerts first
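For that last step, a synthetic alert lets you run the whole workflow without stressing a real server. A minimal sketch for Node.js 18+ (which provides a global `fetch`); the webhook URL is a placeholder for your own n8n Webhook node's URL, the `buildTestPayload` and `sendTestAlert` helpers are hypothetical, and the alert reuses the `HighCPU` labels from the sample rule with a non-critical `warning` severity.

```javascript
// Build an AlertManager-style webhook body containing one synthetic alert.
function buildTestPayload({ alertname = 'HighCPU', instance = 'test-host:9100', severity = 'warning' } = {}) {
  const now = new Date().toISOString();
  return {
    version: '4',
    status: 'firing',
    receiver: 'n8n',
    alerts: [{
      status: 'firing',
      labels: { alertname, instance, severity, service: 'node' },
      annotations: { description: `Synthetic test alert for ${instance}` },
      startsAt: now,
      endsAt: '0001-01-01T00:00:00Z', // what AlertManager sends for a still-firing alert
      fingerprint: 'synthetic-test',
    }],
  };
}

// POST the payload to the n8n webhook and return the HTTP status code.
async function sendTestAlert(url, options) {
  const res = await fetch(url, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(buildTestPayload(options)),
  });
  return res.status;
}

// Example (placeholder URL, not run here):
// sendTestAlert('https://n8n.example.com/webhook/<your-path>').then(console.log);
```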

Customization and Flexibility

This workflow is designed as a foundation template - adapt it to your infrastructure:

Decision Logic Options:

- Keep AI for intelligent analysis

- Replace with rule-based if/then logic (covered in Part 2)

- Use hybrid: AI analysis + deterministic decisions

- Integrate with your existing runbooks

Execution Layer Alternatives:

- SSH commands (current approach)

- Kubernetes API calls

- Docker container management

- Ansible playbook triggers

- Your existing automation tools

Limitations and Considerations

While this AI-driven approach offers powerful automation capabilities, it's important to understand its limitations:

Non-Deterministic Behavior:

- AI decisions may vary between identical scenarios

- Potential for "hallucinated" commands not in your approved set

- Unpredictable responses under edge cases

Data Privacy Concerns:

- System metrics and server information sent to cloud APIs

- Potential regulatory compliance issues for sensitive environments

- Third-party dependency for critical infrastructure decisions

Consistency Challenges:

- Different responses to similar alerts over time

- Difficulty in debugging AI decision logic

- Complex audit trails for compliance requirements
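One partial mitigation for the audit-trail problem is to record every decision with enough context to replay it: the alert fingerprint, a hash of the exact prompt, the raw model reply, and what was actually executed. A sketch using Node's built-in `crypto` module; the record fields and the `buildAuditRecord` name are assumptions, and where you store the records is up to you. Inside an n8n Code node, built-in modules typically have to be permitted through the `NODE_FUNCTION_ALLOW_BUILTIN` environment variable.

```javascript
const crypto = require('crypto');

// One append-only record per AI decision, so identical alerts with different outcomes can be compared.
function buildAuditRecord({ alert, prompt, rawReply, executed }) {
  return {
    timestamp: new Date().toISOString(),
    fingerprint: alert.fingerprint,
    alertname: alert.alertname,
    // Equal hashes mean two decisions saw exactly the same input.
    promptSha256: crypto.createHash('sha256').update(prompt).digest('hex'),
    rawReply,
    executed, // commands that actually ran, after safety filtering
  };
}

const record = buildAuditRecord({
  alert: { fingerprint: '80e7d055dbb50b48', alertname: 'HighCPU' },
  prompt: 'Analyze the following Prometheus alert...',
  rawReply: '{"decision":"NOTIFY_ONLY"}',
  executed: [],
});
console.log(record.promptSha256.length); // 64
```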

Coming in Part 2: Deterministic DevOps Automation for Uptime

For teams who prefer predictable, rule-based DevOps automation for uptime protection, Part 2 will demonstrate how to replace AI decision nodes with deterministic logic while maintaining the intelligent infrastructure analysis capabilities for proactive monitoring.

We'll cover rule-based decision trees, hybrid approaches combining AI analysis with deterministic execution, and production-hardened workflows that provide the consistency and predictability that enterprise uptime monitoring environments require.